HPC compute environments

Tower streamlines the deployment of Nextflow pipelines to both cloud-based and on-premises HPC clusters. It supports compute environment creation for the following workload management and scheduling solutions:

- Altair PBS Pro

- Altair Grid Engine

- IBM Spectrum LSF (Load Sharing Facility)

- Moab

- Slurm

Requirements

To launch pipelines into an HPC cluster from Tower, the following requirements must be satisfied:

- The cluster must allow outbound connections to the Tower web service.

- The cluster queue used to run the Nextflow head job must be able to submit cluster jobs.

- The Nextflow runtime version 21.02.0-edge (or later) must be installed on the cluster.
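To check the version requirement before creating the compute environment, the numeric part of a version string, such as the one printed by `nextflow -version` on the login node, can be compared against 21.02.0-edge. The following Python sketch is illustrative only: `parse_version` and `meets_minimum` are hypothetical helpers. It assumes the `YY.MM.PATCH[-suffix]` version format and ignores the `-edge` suffix when comparing, so a stable release with the same number also counts as meeting the requirement.

```python
from __future__ import annotations

import re

# Minimum version taken from the requirement above.
MINIMUM = "21.02.0-edge"


def parse_version(version: str) -> tuple[int, int, int]:
    """Extract the numeric YY.MM.PATCH part, ignoring suffixes such as '-edge'."""
    match = re.match(r"^\s*(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized Nextflow version: {version!r}")
    year, month, patch = (int(part) for part in match.groups())
    return year, month, patch


def meets_minimum(version: str, minimum: str = MINIMUM) -> bool:
    """True if `version` is at least `minimum` (tuple comparison, suffix ignored)."""
    return parse_version(version) >= parse_version(minimum)
```

For example, `meets_minimum("22.10.6")` is true, while `meets_minimum("20.10.0")` is false.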

Compute environment

To create a new HPC compute environment in Tower:

- In a workspace, select Compute environments and then New environment.

- Enter a descriptive name for this environment.

- Select your HPC environment from the Platform dropdown menu.

- Select your credentials, or select + and then SSH or Tower Agent to add new credentials.

- Enter a name for the credentials.

- Enter the absolute path of the Work directory to be used on the cluster.

- Enter the absolute path of the Launch directory to be used on the cluster. If omitted, the work directory is used.

- Enter the Login hostname. This is usually the hostname or public IP address of the cluster's login node.

- Enter the Head queue name. This is the default cluster queue to which the Nextflow head job is submitted.

- Enter the Compute queue name. This is the default cluster queue to which the Nextflow head job submits tasks.

- Expand Staging options to add optional pre-run or post-run Bash scripts that execute before or after the Nextflow pipeline runs in your environment.

- Use the Environment variables option to specify custom environment variables for the head job, the compute jobs, or both.

- Configure any of the advanced options described below, as needed.

- Select Create to finalize the creation of the compute environment.
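The fields collected in the steps above can be sketched as a small Python data model. This is a hypothetical illustration, not Tower's API: the class and attribute names are assumptions. It captures two rules stated in the steps: the work and launch directories must be absolute paths, and an omitted launch directory falls back to the work directory. Separate dictionaries hold the head job and compute job environment variables.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from posixpath import isabs


@dataclass
class HpcComputeEnv:
    """Hypothetical model of the HPC compute environment form (not a Tower API)."""

    name: str
    platform: str        # illustrative identifier, e.g. "slurm" or "lsf"
    work_dir: str        # absolute path on the cluster
    login_host: str      # hostname or public IP of the login node
    head_queue: str      # queue that receives the Nextflow head job
    compute_queue: str   # queue the head job submits tasks to
    launch_dir: str | None = None  # falls back to work_dir when omitted
    head_env: dict[str, str] = field(default_factory=dict)
    compute_env: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Apply the documented default: launch directory = work directory.
        if self.launch_dir is None:
            self.launch_dir = self.work_dir
        # Both directories must be absolute paths on the (POSIX) cluster.
        for label, path in (("work", self.work_dir), ("launch", self.launch_dir)):
            if not isabs(path):
                raise ValueError(f"{label} directory must be an absolute path: {path!r}")
```

For example, `HpcComputeEnv("my-hpc", "slurm", "/scratch/work", "login.example.org", "long", "short")` ends up with `launch_dir == "/scratch/work"`; the hostname and queue names here are placeholders.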

Once the compute environment is created, see the documentation for launching pipelines.

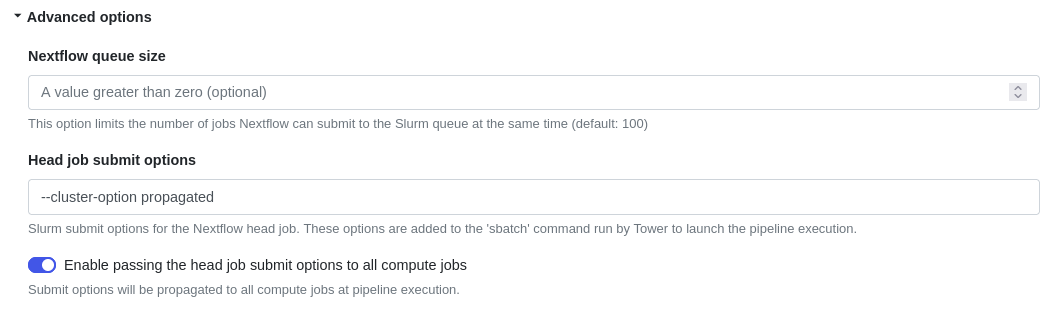

Advanced options

- Use the Nextflow queue size to limit the number of jobs that Nextflow can submit to the scheduler at the same time.

- Use the Head job submit options to specify platform-specific submit options for the head job. You can optionally apply these options to compute jobs as well. Once set during compute environment creation, these options cannot be overridden at pipeline launch time.
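To see the effect of the Nextflow queue size, the following Python sketch simulates a throttled submitter: once `queue_size` jobs are held by the scheduler, the next job is submitted only when an earlier one finishes. It is a simplified model under stated assumptions (each submitted job starts immediately and runs for a fixed duration, with no scheduler wait time), and `simulate_submissions` is a hypothetical name. In Nextflow itself this limit is set with the `executor.queueSize` configuration option, which this Tower field presumably maps to.

```python
from __future__ import annotations

import heapq


def simulate_submissions(durations: list[float], queue_size: int) -> tuple[float, int]:
    """Return (makespan, peak number of jobs in the scheduler) for a throttled submitter.

    Simplifying assumption: a submitted job starts at once and runs for its duration.
    """
    if queue_size < 1:
        raise ValueError("queue_size must be at least 1")
    running: list[float] = []  # min-heap of finish times of jobs in the scheduler
    now = 0.0
    peak = 0
    for duration in durations:
        if len(running) == queue_size:
            # Queue is full: wait until the earliest job finishes, then submit.
            now = heapq.heappop(running)
        heapq.heappush(running, now + duration)
        peak = max(peak, len(running))
    # Jobs still in the heap finish last, so their maximum is the makespan.
    makespan = max(running, default=0.0)
    return makespan, peak
```

With four one-unit jobs and a queue size of 2, `simulate_submissions([1, 1, 1, 1], 2)` returns `(2.0, 2)`: the scheduler never holds more than two jobs, at the cost of a longer run than with an unlimited queue.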

IBM LSF additional advanced options

- Use Unit for memory limits, Per job memory limits, and Per task reserve to control how memory is requested for Nextflow jobs.
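The interaction of these three options can be illustrated with a Python sketch. It assumes the behavior documented for Nextflow's LSF executor settings `perJobMemLimit` and `perTaskReserve`: unless per-job limits are enabled, the requested memory is divided by the number of CPUs to form the limit, and when per-task reserve is enabled, the reservation is divided the same way. The `unit` argument stands in for LSF's `LSF_UNIT_FOR_LIMITS` setting. The function name and return shape are hypothetical, and the exact submit directives Nextflow generates may differ.

```python
from __future__ import annotations

# Assumed binary unit factors; a real cluster reads its unit from lsf.conf.
UNIT_FACTORS = {"KB": 1024, "MB": 1024**2, "GB": 1024**3}


def lsf_memory_request(
    mem_bytes: int,
    cpus: int,
    unit: str = "MB",
    per_job_mem_limit: bool = False,
    per_task_reserve: bool = False,
) -> tuple[int, int]:
    """Hypothetical helper: return (memory limit, memory reservation) in `unit`."""
    if unit not in UNIT_FACTORS:
        raise ValueError(f"unsupported unit: {unit}")
    if cpus < 1:
        raise ValueError("cpus must be at least 1")
    # Per-process limit mode (assumed default): split the total across CPUs.
    limit = mem_bytes if per_job_mem_limit else mem_bytes // cpus
    # Per-task reserve mode: reserve memory per CPU instead of for the whole job.
    reserve = mem_bytes // cpus if per_task_reserve else mem_bytes
    factor = UNIT_FACTORS[unit]
    return limit // factor, reserve // factor
```

For a task requesting 8 GB on 4 CPUs with the defaults, this sketch yields a limit of 2048 MB and a reservation of 8192 MB; enabling per-job memory limits raises the limit to the full 8192 MB.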